
Blocking or blacklisting an IP address is a useful way to thwart bad actors misusing your API.

It turns out there’s a really easy way to block IP addresses in API Gateway for your REST API: a resource policy.

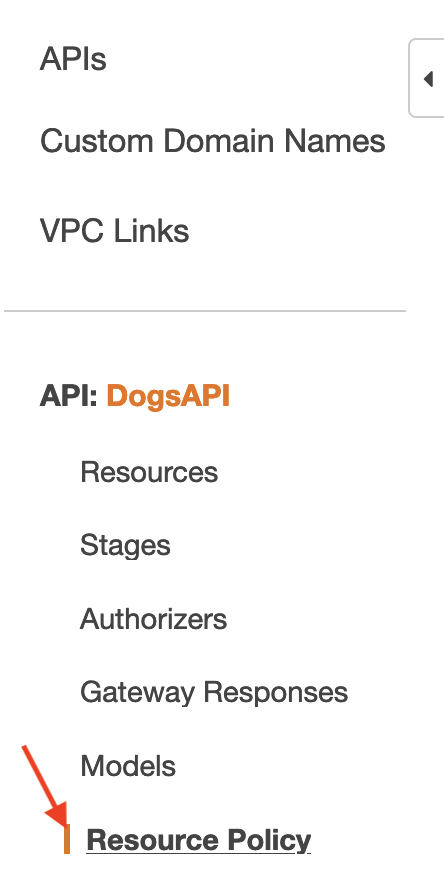

To get started, head over to the Resources tab of your REST API as seen below.

To blacklist or block an IP address, enter the following resource policy (it uses the IAM policy language). Make sure to replace the placeholder with the IP address you want to block.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": "*",
      "Action": "execute-api:Invoke",
      "Resource": "*"
    },
    {
      "Effect": "Deny",
      "Principal": "*",
      "Action": "execute-api:Invoke",
      "Resource": "*",
      "Condition": {
        "IpAddress": {
          "aws:SourceIp": [
            "IP_ADDRESS_TO_BLOCK_HERE"
          ]
        }
      }
    }
  ]
}
```
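If you manage the blocklist in code rather than pasting JSON by hand, a minimal Python sketch that produces the same two-statement policy might look like this. `build_block_policy` is a hypothetical helper name, and the example addresses are documentation-range placeholders, not values from this post.

```python
import json


def build_block_policy(blocked_ips):
    """Return a resource policy that allows everyone except the given IPs.

    Hypothetical helper: mirrors the Allow + Deny statements shown above.
    Entries may be single addresses or CIDR ranges.
    """
    if not blocked_ips:
        raise ValueError("need at least one IP address or CIDR range to block")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Blanket Allow: anyone may invoke the API...
                "Effect": "Allow",
                "Principal": "*",
                "Action": "execute-api:Invoke",
                "Resource": "*",
            },
            {
                # ...except callers whose source IP matches the list.
                "Effect": "Deny",
                "Principal": "*",
                "Action": "execute-api:Invoke",
                "Resource": "*",
                "Condition": {"IpAddress": {"aws:SourceIp": list(blocked_ips)}},
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder values: one single address and one CIDR range.
    policy = build_block_policy(["203.0.113.7", "198.51.100.0/24"])
    print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the Resource Policy editor as-is.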

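This policy allows anyone to invoke the API but denies access to any IP addresses you specify, because in IAM policy evaluation an explicit Deny always overrides an Allow. Note that `aws:SourceIp` takes a list, so you can block one or more IP addresses; CIDR ranges such as `203.0.113.0/24` are accepted as well.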
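To make the "explicit Deny wins" rule concrete, here is a deliberately simplified Python model of how these two statements combine for a single caller. It is not AWS's actual policy evaluator, and `is_allowed` is a hypothetical name; it uses the standard `ipaddress` module so that a CIDR entry matches every address it covers.

```python
import ipaddress


def is_allowed(source_ip, blocked):
    """Simplified model of the policy above: Allow everyone, Deny listed IPs.

    Not AWS's real evaluator; it only illustrates that an explicit Deny
    overrides the blanket Allow.
    """
    addr = ipaddress.ip_address(source_ip)
    # Statement 1: the blanket Allow matches every caller.
    allowed = True
    # Statement 2: the Deny matches if the caller is inside any listed entry.
    # strict=False lets both "1.2.3.4" and "1.2.3.0/24" parse as networks.
    # An IPv4 address is never "in" an IPv6 network (and vice versa).
    denied = any(
        addr in ipaddress.ip_network(entry, strict=False) for entry in blocked
    )
    # Explicit Deny overrides any Allow.
    return allowed and not denied
```

For example, `is_allowed("198.51.100.42", ["198.51.100.0/24"])` returns `False`, while any address outside the list returns `True`.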

Note: to make the change live, you need to redeploy your API to your stage. You can do this from the Resources view by choosing Deploy API and selecting the stage.
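If you'd rather script the update and redeploy than click through the console, here is a sketch built on boto3's API Gateway client. It assumes that `update_rest_api` accepts a `replace` patch operation on the `/policy` path with the policy serialized as a JSON string, and that `create_deployment` is how the change reaches the stage; check both against the boto3 documentation before relying on it. `apply_block_policy` is a hypothetical name, and the client is passed in rather than created inside the function so it can be exercised without AWS credentials.

```python
import json


def apply_block_policy(client, rest_api_id, stage_name, policy):
    """Replace the REST API's resource policy, then redeploy so it goes live.

    `client` is expected to be boto3.client("apigateway"); `policy` is the
    policy document as a Python dict.
    """
    client.update_rest_api(
        restApiId=rest_api_id,
        patchOperations=[
            # The policy value is sent as a JSON string, not as a dict.
            {"op": "replace", "path": "/policy", "value": json.dumps(policy)}
        ],
    )
    # Without a new deployment the stage keeps serving the old policy.
    return client.create_deployment(restApiId=rest_api_id, stageName=stage_name)
```

Usage would be along the lines of `apply_block_policy(boto3.client("apigateway"), "your-api-id", "test", policy)`, where the API ID and stage name are placeholders for your own.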

After a few minutes (it took about 5 for the change to take effect for me), the blocked user will be denied all access to the API and will see an error message similar to the following:

```json
{
  "Message": "User: anonymous is not authorized to perform: execute-api:Invoke on resource: arn:aws:execute-api:us-east-1:********5794:sg1v5qzfuk/test/GET/dogs with an explicit deny"
}
```
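A client or test script can tell this policy denial apart from other 403 responses by checking the message text. A minimal sketch, assuming the 403 body is JSON with a `Message` field like the one above; `is_explicit_deny` is a hypothetical name.

```python
import json


def is_explicit_deny(status_code, body):
    """Return True if a response looks like a resource-policy denial.

    Assumes the 403 body is a JSON object with a "Message" field, as in the
    example error above.
    """
    if status_code != 403:
        return False
    try:
        message = json.loads(body).get("Message") or ""
    except (ValueError, AttributeError):
        # Not JSON, or JSON that is not an object: some other kind of error.
        return False
    return isinstance(message, str) and "with an explicit deny" in message
```

Matching on the trailing "with an explicit deny" phrase keeps the check independent of the account ID, API ID, stage and path embedded in the ARN.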

If you want to test this out, you can use this website to find your own IP and experiment with this feature.
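If you'd rather look up your public IP from a script, one option is AWS's `https://checkip.amazonaws.com` endpoint, which returns the caller's address as plain text. The sketch below assumes that endpoint is reachable from your machine; `parse_ip` and `my_public_ip` are hypothetical names. A lookup service is needed because the address API Gateway sees is your public address, which is often not the one on your local network interface.

```python
import ipaddress
import urllib.request

CHECKIP_URL = "https://checkip.amazonaws.com"


def parse_ip(text):
    """Validate and normalize the plain-text body returned by the lookup service."""
    # ip_address raises ValueError if the body is not a valid IPv4/IPv6 address.
    return str(ipaddress.ip_address(text.strip()))


def my_public_ip(timeout=5):
    """Ask CHECKIP_URL which address our requests come from (needs network access)."""
    with urllib.request.urlopen(CHECKIP_URL, timeout=timeout) as resp:
        return parse_ip(resp.read().decode("ascii"))
```

Calling `my_public_ip()` gives a value you can drop into the `aws:SourceIp` list to block yourself, then remove again once you've seen the explicit-deny error.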